The Productive Role of Recurring Mistakes: Pattern Recognition in Professional Practice

In most professional contexts, mistakes are viewed as events to be avoided, corrected, or ideally prevented altogether. Yet within the realities of complex work—whether in software development, product design, or team management—mistakes often serve a more constructive purpose than we typically acknowledge.

While isolated errors may seem like noise, recurring mistakes offer valuable insight. They reveal patterns, expose flawed assumptions, and challenge mental models. Through recurrence, professionals can move beyond surface-level corrections and begin to engage in deeper, systemic improvement.

Beyond the One-Off Error

In simple systems, mistakes may have obvious causes and equally obvious fixes. But complex systems—those involving multiple actors, shifting variables, or layered abstractions—rarely provide such clarity. When a problem arises once, it may be dismissed as a fluke. When it recurs, however, it demands attention.

Recurrence is not necessarily a sign of incompetence or negligence. Instead, it may indicate that something within the system is consistently misaligned: a process that is misunderstood, a design that is misinterpreted, or an assumption that does not hold under real-world conditions.

These repeated breakdowns serve a diagnostic function. They point not just to what went wrong, but to why it continues to go wrong. This shift in perspective—from treating errors as isolated events to seeing them as symptoms of broader patterns—is essential for developing mature, adaptive systems.

The Legacy of Negative Framing

From an early age, many people are taught that mistakes are personal failings. In most traditional school settings, the focus is on right answers, correct methods, and avoiding error. Mistakes are highlighted, marked in red, or used as evidence of falling short. Rarely are they treated as the essential raw material for learning.

This mindset often carries into adult life. Many professionals feel shame or fear when making mistakes—even small, everyday ones—because they have internalized the idea that getting things wrong signals weakness, stupidity, or lack of preparedness. This cultural framing is not just unhelpful; it’s actively harmful.

When we treat human error as a defect of character, we create environments where people feel the need to hide their mistakes, avoid risk, or retreat from learning opportunities. In contrast, organizations that cultivate psychological safety, where mistakes can be discussed openly and constructively, tend to surface problems earlier and learn from them faster than those that don't.

Professional growth depends on being wrong in the open, and learning why. Reframing mistakes as informative rather than shameful is not only more humane—it’s more effective.

The Cost of Dismissing Recurrence

In some environments, recurring mistakes are met with little more than impatience or punitive action. People are shuffled out, replaced, or quietly sidelined. New workers arrive, only to find themselves navigating the same landscape—and making the same missteps.

Over time, I’ve observed what can only be described as a conveyor belt of talent, where individuals are discarded not because they are incapable, but because they are placed into flawed systems that do not evolve. Instead of investigating the recurring issues, or asking what the system might be failing to support or reveal, responsibility is shifted solely onto the individual.

This cycle is counterproductive for both the worker and the organization. For the worker, an opportunity for growth and mastery is denied. For the company, institutional memory is lost, lessons go unlearned, and the same inefficiencies repeat under a different name.

When recurring mistakes are met with rotation instead of reflection, everyone loses—and the system stays broken.

The Limits of Front-Loaded Knowledge

A common belief, especially in hierarchical or rigidly structured organizations, is that mistakes should be preemptively eliminated through training, education, or process documentation. While these are undoubtedly important, they cannot account for everything an unpredictable real-world environment will present.

No amount of formal education can fully prepare someone for the nuance and variability of live systems, evolving teams, or human behavior. Professionals must often construct knowledge through interaction with the system itself, encountering its boundaries, surprises, and contradictions firsthand.

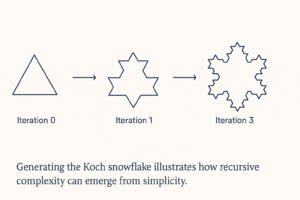

Mistakes—especially those that recur—play a key role in that construction process. They surface information that was previously unknown, untested, or misunderstood. Through that surfacing, the system teaches us how it really works, as opposed to how we thought it did.

Recurrence as a Tool for Design and Process Improvement

In design disciplines, recurrence often manifests through repeated user confusion, friction, or misinterpretation. These are not just isolated lapses, but indicators that something in the system invites misunderstanding. Rather than correcting users, designers must recognize that the system is not communicating clearly.

Similarly, in development or operations, repeated failures may reflect a disconnect between the intent of the system and its actual behavior under variable conditions. Recurring faults in implementation, integration, or deployment highlight points of fragility that are not always visible during initial planning or isolated testing.

In management, repeated miscommunication, unmet expectations, or process breakdowns often trace back to assumptions that were never aligned. Addressing these issues reactively treats only the symptoms. Allowing patterns to emerge over time makes it possible to identify structural causes and introduce meaningful change.

A Case for Slower Correction

There is a tendency in fast-paced environments to prioritize immediate correction. While this can be useful for triage, it often short-circuits learning. Not all problems should be solved at first sight. In some cases, allowing a mistake to recur—safely and observably—provides the data needed to address it at the level of principle rather than procedure.

This is not about tolerating negligence or accepting harm. It is about recognizing that premature fixes may obscure patterns, and that meaningful correction often depends on the emergence of those patterns over time.

Toward Systemic Learning

This idea aligns with established models in organizational learning, such as double-loop learning, described by Chris Argyris and Donald Schön. Where single-loop learning corrects behavior within the existing rules, double-loop learning challenges the underlying assumptions and rules that govern that behavior.

Recurring mistakes prompt this kind of reflection. They demand not just a better answer, but a better question: What system dynamics are producing this result, and what needs to change for the result itself to disappear?

Seen this way, recurrence is not a failure of professionalism. It is a pathway to greater insight—a signal that the system is offering a lesson we have not yet learned.

Conclusion: Rethinking Professionalism

The idea that professionals should not make mistakes—or that all relevant mistakes should have been “learned from at school”—reflects a flawed and outdated understanding of how expertise develops.

In both professional and personal contexts, human error is not a weakness—it’s a window. It reveals where we are still growing, where systems are still opaque, and where opportunities for improvement remain hidden.

By embracing recurrence, acknowledging the legitimacy of everyday mistakes, and creating environments where error is met with curiosity instead of shame, we can build more adaptive teams, better systems, and more humane workplaces.

After all, not everything worth learning can be taught in advance.

Some lessons are only revealed through experience—mistake by mistake.